It’s fascinating how neuroscience principles have not only enhanced the AI field but also benefited from it. Neuroscience has even been instrumental in validating existing AI-based models.

The study of biological neural networks has been a game-changer, leading to the creation of advanced deep neural network architectures. These developments have led to breakthroughs in areas like text processing and speech recognition.

Similarly, AI systems are proving to be a boon for neuroscientists, aiding them in testing hypotheses and analyzing neuroimaging data. This synergy is particularly valuable in the early detection and treatment of psychiatric disorders.

Moreover, these AI systems can interact with the human brain to control devices like robotic arms, which is a big step forward for people with paralysis.

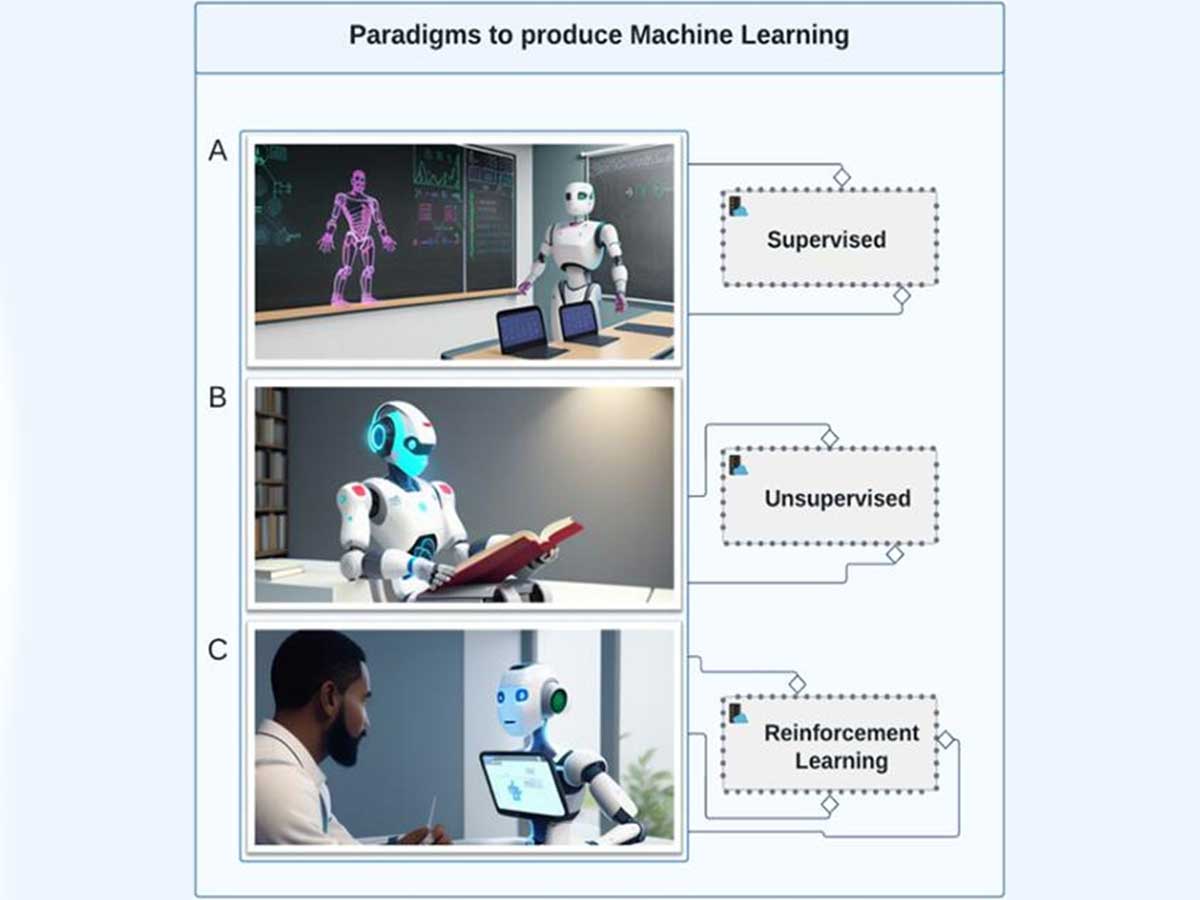

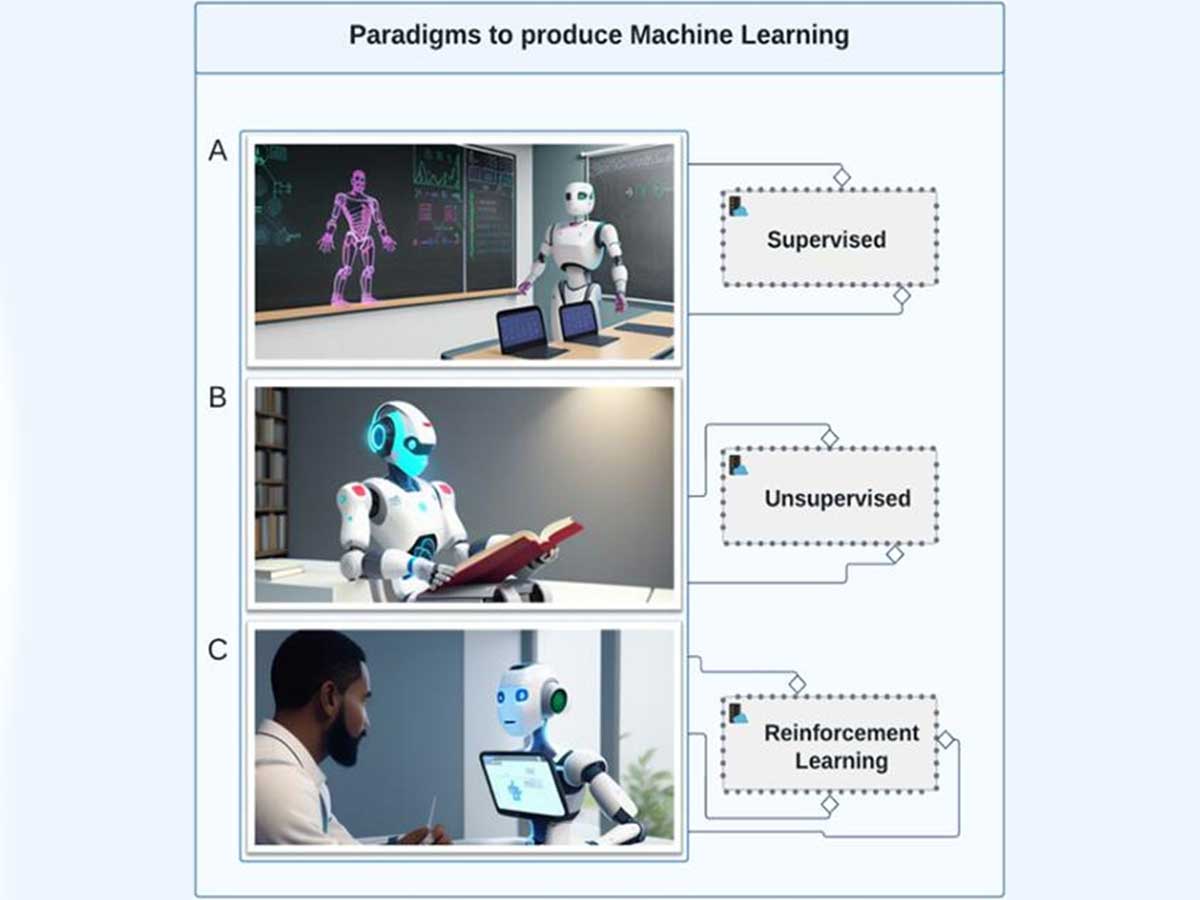

Computer science has also seen significant advancements by adopting reinforcement learning (RL), which is modeled on how humans and animals learn and lets artificial systems learn without explicit instructions.

This approach has been successfully applied in diverse areas, including robot-based surgery and gaming applications. Overall, AI’s ability to dissect complex data and unearth hidden patterns makes it an ideal tool for neuroscience data analysis.

Before we talk about how AI is used to study neuroscience, let’s talk about artificial intelligence itself.

Intelligent machines and machine learning

Back in 1955, John McCarthy first introduced us to ‘Artificial Intelligence’ as the craft of creating intelligent machines. Today, AI is about machines showing more human-like intelligence, but pinning down exactly what human intelligence is remains tricky.

In 1997, an expert group tried to nail down a definition, saying intelligence means being able to reason, plan, solve problems, think abstractly, grasp complex ideas, and learn from experience.

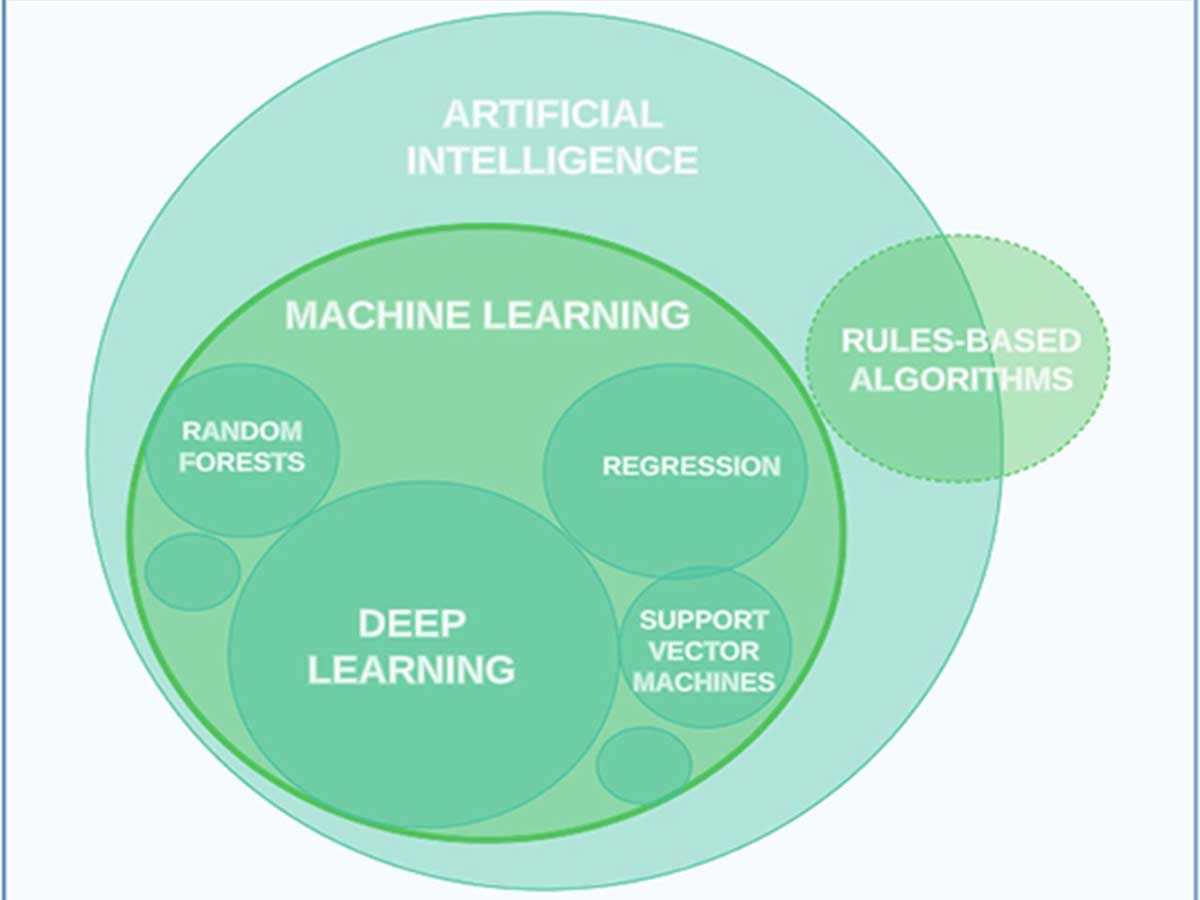

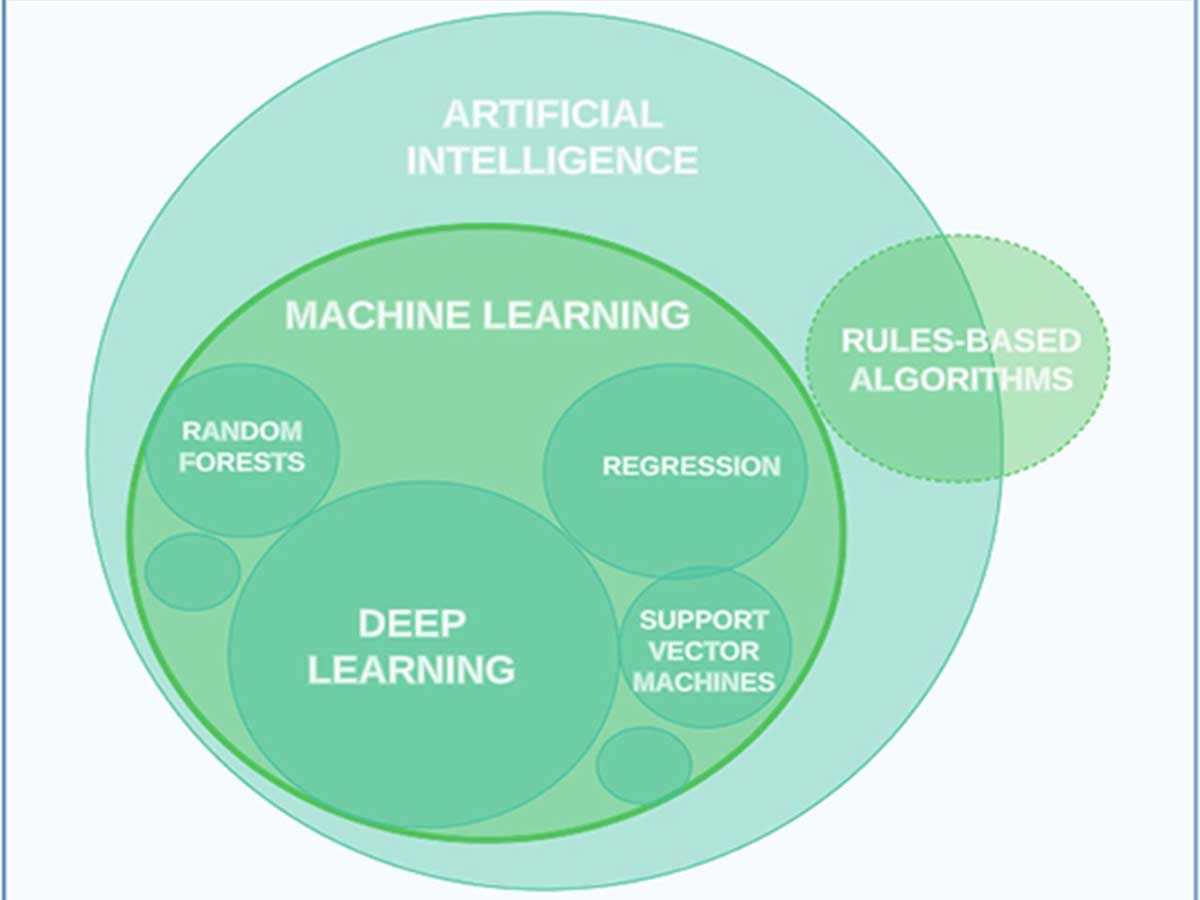

Back in the day, we had rules-based approaches like decision flow charts. You’ve probably seen these flow charts in smartphone apps or triaging software.

But most experts wouldn’t call these AI because they’re too predictable and can miss the mark by ignoring context. They’re like doctors’ protocols and algorithms for deciding on tests or treatments.

Sure, hospitals have loads of these in their manuals, but they’re not always used because they can be too complicated or just too much to handle.

Then, there was a shift to newer methods like machine learning and deep learning, which are more about probabilities and about adapting their responses and behavior based on the data they’re fed, and way less about following a set script.

Machine learning, a part of AI, is all about teaching computer systems to learn from data and get better over time without someone having to constantly tweak the programming.

These machine learning algorithms can spot patterns and relationships in data and use this to predict things. They range from simple methods like logistic regression to complex deep learning algorithms.
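To make “learning from data” concrete, here is a minimal sketch of logistic regression, the simple end of that range, fitted with plain gradient descent. The toy data, learning rate, and number of epochs are made-up assumptions for illustration; in practice you would normally reach for a library such as scikit-learn instead of a hand-rolled loop.

```python
import math

def sigmoid(z: float) -> float:
    """Squash any real number into (0, 1) so it can be read as a probability."""
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic_regression(xs, ys, lr=0.5, epochs=2000):
    """Fit weight w and bias b by batch gradient descent on the log-loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            error = sigmoid(w * x + b) - y  # predicted probability minus true label
            grad_w += error * x
            grad_b += error
        # Nudge both parameters against the average gradient.
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b

# Hypothetical toy data: one feature, label 1 when the feature is "high".
xs = [0.5, 1.0, 1.5, 2.0, 3.0, 3.5, 4.0, 4.5]
ys = [0, 0, 0, 0, 1, 1, 1, 1]
w, b = train_logistic_regression(xs, ys)
print(round(sigmoid(w * 1.0 + b), 3), round(sigmoid(w * 4.0 + b), 3))
```

Nobody writes the rule “above 2.5 means class 1” here; the pattern is picked up from the examples, which is the whole point of machine learning.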

Neuroscience inspired the design of intelligent systems

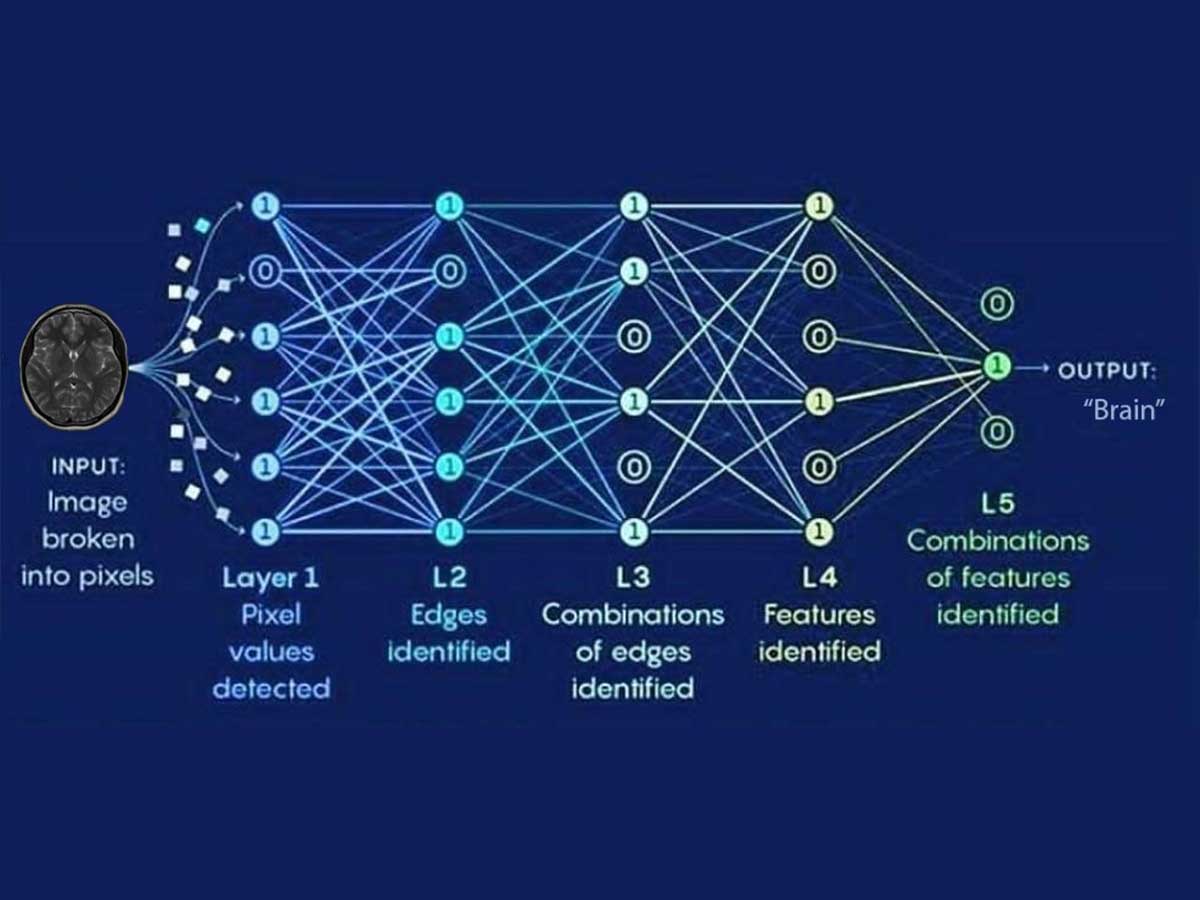

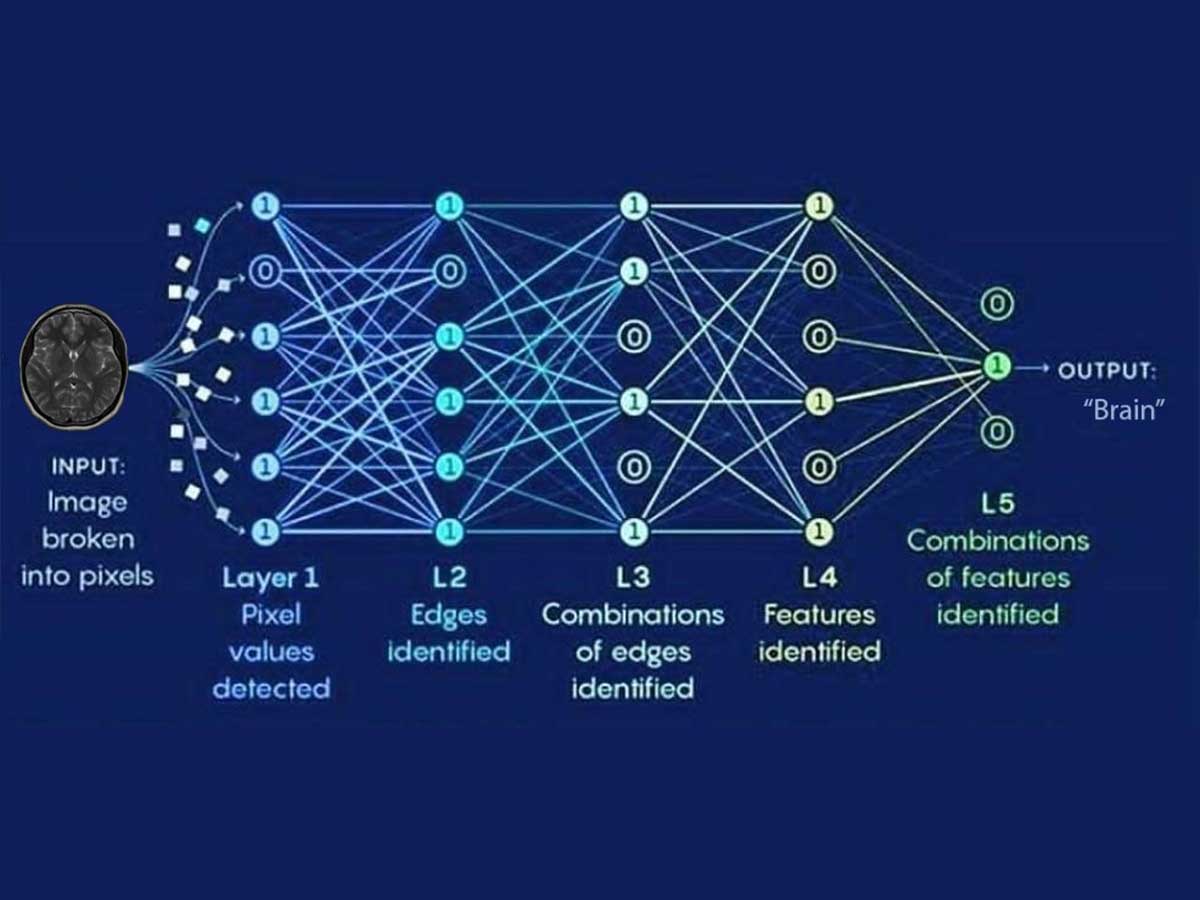

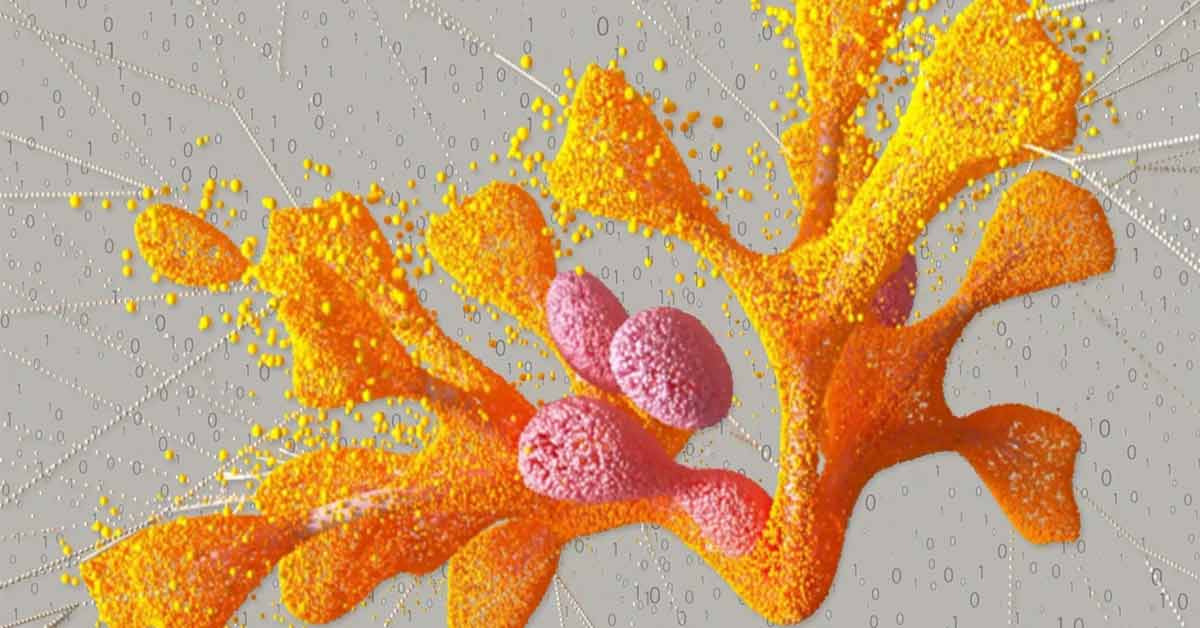

Neuroscience has been a huge inspiration for the design of AI systems. Think about how our brain works with all those neurons. Artificial neural networks (ANNs) loosely mimic this, with loads of simple units working together. Back in the 1950s, Frank Rosenblatt created a simple ANN called a perceptron, inspired by Hebbian learning and the brain’s structure.

This concept evolved into networks of perceptrons and then into something more complex called a multi-layer perceptron (MLP). In an MLP, the output from one layer gets passed on to the next, all the way to the end, until you get the result you’re after.
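Here’s a minimal sketch of that layer-by-layer flow (a forward pass) in plain Python. The weights are hypothetical and hand-picked so that the two ReLU hidden units compute max(0, a - b) and max(0, b - a); a sigmoid output unit then squashes their sum, |a - b|. In a trained MLP these numbers would be learned rather than chosen.

```python
import math

def relu(z):
    return max(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense_layer(inputs, weights, biases, activation):
    """One fully connected layer: every unit takes a weighted sum of all the inputs."""
    return [
        activation(sum(w * x for w, x in zip(unit_weights, inputs)) + b)
        for unit_weights, b in zip(weights, biases)
    ]

def mlp_forward(inputs, layers):
    """Feed each layer's output into the next layer, all the way to the end."""
    activations = inputs
    for weights, biases, activation in layers:
        activations = dense_layer(activations, weights, biases, activation)
    return activations

# Hypothetical weights: 2 inputs -> 2 ReLU hidden units -> 1 sigmoid output.
layers = [
    ([[1.0, -1.0], [-1.0, 1.0]], [0.0, 0.0], relu),
    ([[1.0, 1.0]], [0.0], sigmoid),
]
print(mlp_forward([3.0, 1.0], layers))  # about 0.88, i.e. sigmoid(|3 - 1|)
```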

The way our brain’s working memory functions inspired the design of recurrent neural networks (RNNs). These are smart because they feed their own past results back in and use them to figure out what comes next.
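A minimal sketch of that recurrence, assuming a single-number hidden state and hypothetical weights: at each step the new hidden state depends on both the current input and the previous hidden state. So two sequences that end with the same input can still leave the network in different states, which is a simple form of memory.

```python
import math

def rnn_step(x, h_prev, w_x, w_h, b):
    """Combine the current input with the previous hidden state, then squash with tanh."""
    return math.tanh(w_x * x + w_h * h_prev + b)

def run_rnn(sequence, w_x=1.0, w_h=0.5, b=0.0):
    """Run a scalar RNN over a sequence; the hidden state h carries the past forward."""
    h = 0.0
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states

# Same final input (0.0), different history: the final hidden states differ.
after_positive = run_rnn([1.0, 0.0])
after_negative = run_rnn([-1.0, 0.0])
print(after_positive[-1], after_negative[-1])
```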

There’s even a special type of RNN called a long short-term memory (LSTM) network. These are well suited to tasks that require remembering information over longer stretches, like summarizing text.
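The trick behind an LSTM is a separate cell state guarded by gates. The scalar sketch below assumes hand-set, hypothetical weights (a real LSTM learns them and works on vectors). With a large forget-gate bias, a single early input survives twenty steps of silence; with a negative bias, it is wiped out almost immediately.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One step of a scalar LSTM cell; p holds hypothetical weights for each gate."""
    f = sigmoid(p["wf"] * x + p["uf"] * h_prev + p["bf"])    # forget gate: how much old memory to keep
    i = sigmoid(p["wi"] * x + p["ui"] * h_prev + p["bi"])    # input gate: how much new info to write
    g = math.tanh(p["wg"] * x + p["ug"] * h_prev + p["bg"])  # candidate memory content
    o = sigmoid(p["wo"] * x + p["uo"] * h_prev + p["bo"])    # output gate: how much memory to expose
    c = f * c_prev + i * g   # cell state: the long-term memory track
    h = o * math.tanh(c)     # hidden state: what the cell outputs at this step
    return h, c

def final_cell_state(sequence, p):
    h, c = 0.0, 0.0
    for x in sequence:
        h, c = lstm_step(x, h, c, p)
    return c

# Hypothetical weights: write strongly when x == 1, write almost nothing when x == 0.
base = dict(wf=0.0, uf=0.0, wi=10.0, ui=0.0, bi=-5.0,
            wg=2.0, ug=0.0, bg=0.0, wo=0.0, uo=0.0, bo=5.0)
signal = [1.0] + [0.0] * 20  # one "event", then twenty quiet steps
remembering = final_cell_state(signal, {**base, "bf": 5.0})   # forget gate mostly open
forgetting = final_cell_state(signal, {**base, "bf": -5.0})   # forget gate mostly closed
print(round(remembering, 3), round(forgetting, 6))
```

The only difference between the two runs is the forget-gate bias, which is exactly the knob an LSTM learns to tune when a task needs longer memory.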

Then there are convolutional neural networks (CNNs), which got their inspiration from the ventral visual stream in our brains. And in the world of AI, there’s this cool thing called reinforcement learning (RL), where a computer learns by interacting with its environment. It’s all about doing things that earn rewards and avoiding what gets you penalties.
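The core operation in a CNN is sliding a small filter across an image, echoing how neurons in the visual system respond to small patches of the visual field. A minimal sketch, assuming a tiny made-up image and a hand-made vertical-edge kernel; real CNNs learn their kernels and stack many such layers.

```python
def conv2d(image, kernel):
    """'Valid' 2D cross-correlation, which is what deep learning libraries usually call convolution."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(kernel[i][j] * image[r + i][c + j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

# Tiny image: dark on the left, bright on the right (a vertical edge).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-made vertical-edge detector; in a real CNN these weights are learned.
kernel = [
    [-1, 1],
    [-1, 1],
]
print(conv2d(image, kernel))  # [[0, 2, 0], [0, 2, 0]]
```

The filter responds only where brightness changes from left to right, so the output “lights up” along the edge and stays at zero on flat regions.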

Deep RL takes this further, using neural networks to map the current situation to the best action. Deep neural networks like these are great for recognizing sounds, text, and images, but man, do they use a lot of computing power.
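Deep RL builds on a simpler reward-driven update. In the tabular Q-learning sketch below, an agent in a hypothetical five-cell corridor learns a value for every state–action pair from a reward at the far end and a small penalty per step; deep RL swaps this lookup table for a neural network that estimates the same values. All parameters (learning rate, discount, exploration rate, rewards) are illustrative assumptions.

```python
import random

def q_learning(n_states=5, episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning in a hypothetical 1-D corridor with a reward at the right end."""
    rng = random.Random(seed)
    actions = (-1, 1)  # step left or step right
    q = {(s, a): 0.0 for s in range(n_states) for a in actions}
    goal = n_states - 1
    for _ in range(episodes):
        state = 0
        while state != goal:
            # Epsilon-greedy: usually exploit current estimates, sometimes explore at random.
            if rng.random() < epsilon:
                action = rng.choice(actions)
            else:
                action = max(actions, key=lambda a: q[(state, a)])
            next_state = min(max(state + action, 0), goal)  # walls at both ends
            reward = 1.0 if next_state == goal else -0.01    # small penalty for every extra step
            best_next = 0.0 if next_state == goal else max(q[(next_state, a)] for a in actions)
            # Move the estimate toward reward + discounted value of the best next action.
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
    return q

q = q_learning()
greedy_policy = [max((-1, 1), key=lambda a: q[(s, a)]) for s in range(4)]
print(greedy_policy)
```

After training, the greedy policy should step right from every cell: the agent has learned which actions lead to reward without ever being told the rule.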

That’s where spiking neural networks (SNNs) come in. They work more like our actual neurons and can be way more energy efficient. Each artificial neuron in an SNN builds up input over time and only sends a spike to other artificial neurons when it hits a certain threshold.
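A common way to model such a neuron is the leaky integrate-and-fire (LIF) model. The discrete-time sketch below assumes arbitrary units, a threshold of 1.0, and a leak factor of 0.9 (all illustrative): the potential builds up with input, slowly leaks away, and produces a spike only when it crosses the threshold, after which it resets.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Leaky integrate-and-fire neuron in discrete time.

    Each step the membrane potential decays by `leak`, adds the input, and emits
    a spike (1) whenever it reaches `threshold`, after which it is reset.
    """
    v = reset
    spikes = []
    for current in input_current:
        v = leak * v + current
        if v >= threshold:
            spikes.append(1)
            v = reset
        else:
            spikes.append(0)
    return spikes

print(simulate_lif([0.3] * 10))   # [0, 0, 0, 1, 0, 0, 0, 1, 0, 0]
print(sum(simulate_lif([0.05] * 50)))  # weak input never reaches threshold: 0
```

Between spikes the neuron sends nothing at all, which is part of why SNNs can be frugal with energy on suitable hardware.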

The Role of AI in Advancing Neuroscience Research

AI is playing a big role in neuroscience these days. It’s like having a high-tech helper for understanding the brain and its challenges. For instance, there are AI-assisted brain–computer/machine interface (BCI) applications that are a big deal for people with conditions that affect movement, like cerebral palsy or spinal cord injuries.

These applications are changing lives by helping people regain control over their movements. Take BrainGate, for example. It’s a brain implant system that lets users control devices like robotic arms just by thinking about it, which is pretty awesome for people who need prostheses.

Then, there’s diagnosing brain issues like meningitis. This infection can be tough to pin down because it shows up in so many different ways. But here’s where AI shines.

AI models can draw on all sorts of data, like the number of neutrophils and lymphocytes in your cerebrospinal fluid (CSF) and even the neutrophil-to-lymphocyte ratio (NLR), to figure out what type of meningitis a person might have. And they can be remarkably accurate.

Neuro-oncology is another area where AI is making a big difference. It’s not just about diagnosing brain cancers more precisely; it’s also about figuring out the best treatment options.

Understanding brain function and neurological disorders is very important. In particular, making sense of brain data from regions like the visual cortex, or of how brain structures support cognitive functions, could advance not only neuroscience but also the building of learning systems and general intelligence systems.

And let’s not forget that, at the end of the day, this research might help build artificial general intelligence (AGI), since studying neurons in the human brain could be key to emulating human intelligence.

Possibility of AI Replacing Neurologists?

In a recent article, we talked about how artificial intelligence systems might take over jobs. Past tech advances mainly hit blue-collar jobs, but now even white-collar jobs seem up for grabs. However, the worry about robots stealing jobs seems overblown when it comes to neurologists and other clinicians.

A neurologist’s work is complex. It includes diagnostic and multimodal interpretation, clinical decision-making, procedural tasks like giving meds or doing lumbar punctures, and, crucially, human communication – things like sharing tough news or explaining treatments.

Plus, there are a bunch of related tasks like administration, para-clinical activities, and research and education.

Each task involves a mix of thinking, talking, and doing that is hard to split up and automate. So, even if artificial intelligence can handle a couple of steps, it’s a long shot to think it could do everything a neurologist does.

Instead, artificial intelligence might change how neurologists work by helping with parts of the job. It could take over some non-core activities, like cutting down paperwork, interpreting tests, or filling out forms.

A great example is voice dictation for letters. It has gone from personal dictation to AI-powered dictation, easing the load on medical secretaries without replacing them entirely. Most clinical artificial intelligence systems are designed to help human doctors, not replace them.

While artificial intelligence aims to make healthcare more efficient, neurologists focus on personalized care. That makes automation tricky in clinical neurology, where skills and experience are key and findings from the neurological exam can vary a lot in accuracy.

For instance, a study on diagnosing lumbosacral radiculopathy through neurological exams showed mixed results in accuracy. That’s why machine learning and feature-ranking algorithms might not rely much on traditional exam findings, which can be inconsistent.

Instead, they use more standardized scales like NIHSS, Rankin, ADAS-Cog, MoCA, and MMSE to grade symptoms rather than diagnose them.

A Brain Analogue: Dealing with Data and Reproducing Senses

Artificial neural networks are like a digital version of our brain’s neurons. Nodes in these networks act like brain cells, linked by mathematical weights similar to synapses. When these systems learn, they adjust these weights to get closer to the right answer. A deep neural network is a more complex version, with multiple hidden layers between the input and the output.
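Here’s a minimal sketch of what “adjusting the weights to get closer to the right answer” looks like for a tiny network with one hidden layer, using backpropagation and gradient descent. The starting weights, learning rate, and single training example are hypothetical, and biases are left out to keep it short; the point is simply that the error shrinks with every update.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, w_hidden, w_out):
    """Inputs -> hidden layer -> single output; no biases, to keep the sketch short."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(unit, x))) for unit in w_hidden]
    output = sigmoid(sum(w * h for w, h in zip(w_out, hidden)))
    return hidden, output

def train_step(x, target, w_hidden, w_out, lr=0.5):
    """One backpropagation + gradient-descent step on the loss 0.5 * (output - target) ** 2."""
    hidden, output = forward(x, w_hidden, w_out)
    # Error signal at the output unit (chain rule through the sigmoid).
    delta_out = (output - target) * output * (1.0 - output)
    # Error signal at each hidden unit, sent back through the (old) output weights.
    delta_hidden = [delta_out * w * h * (1.0 - h) for w, h in zip(w_out, hidden)]
    new_w_out = [w - lr * delta_out * h for w, h in zip(w_out, hidden)]
    new_w_hidden = [
        [w - lr * d * xi for w, xi in zip(unit, x)]
        for unit, d in zip(w_hidden, delta_hidden)
    ]
    return new_w_hidden, new_w_out

x, target = [1.0, 0.5], 1.0            # a single hypothetical training example
w_hidden = [[0.1, -0.2], [0.4, 0.3]]   # hypothetical starting weights: 2 inputs -> 2 hidden units
w_out = [0.2, -0.1]                    # 2 hidden units -> 1 output
losses = []
for _ in range(20):
    _, output = forward(x, w_hidden, w_out)
    losses.append(0.5 * (output - target) ** 2)
    w_hidden, w_out = train_step(x, target, w_hidden, w_out)
print(round(losses[0], 4), round(losses[-1], 4))
```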

A great example is London-based AI research company DeepMind Technologies, now part of Google’s parent company, Alphabet. It used such networks to build AlphaGo, the model that beat a professional human Go player in 2015.

It’s important to remember, though, that these networks are just a rough analogy of how our brains work. Yet, they’ve been super helpful in studying the brain. Artificial intelligence techniques are not just for making models; they’re great for crunching massive amounts of data.

Take functional magnetic resonance imaging (fMRI), which gives us snapshots of brain activity. Artificial intelligence speeds up the analysis of these data heaps.

Take the work of Daniel Yamins at the Wu Tsai Neurosciences Institute at Stanford University. He and his team trained a deep neural network to predict a monkey’s brain activity when it recognizes objects.

They got about 70% accuracy in matching network areas to brain areas. In 2018, they did something similar with the auditory cortex, creating a network that could pick out words and music genres from short clips as accurately as a human.

But it’s not just about building these systems; it’s about testing hypotheses too. Artificial intelligence systems that can imitate human behavior give scientists new ways to explore the brain, free of the ethical concerns that come with experimenting on people.

Examples of AI being tested in neurology

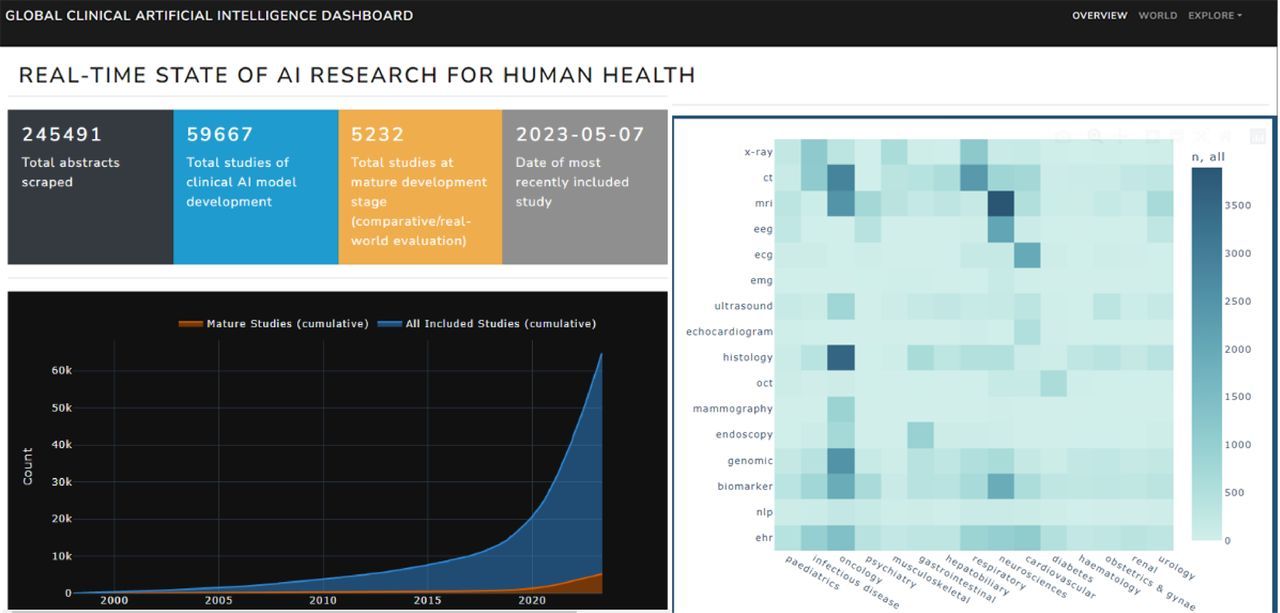

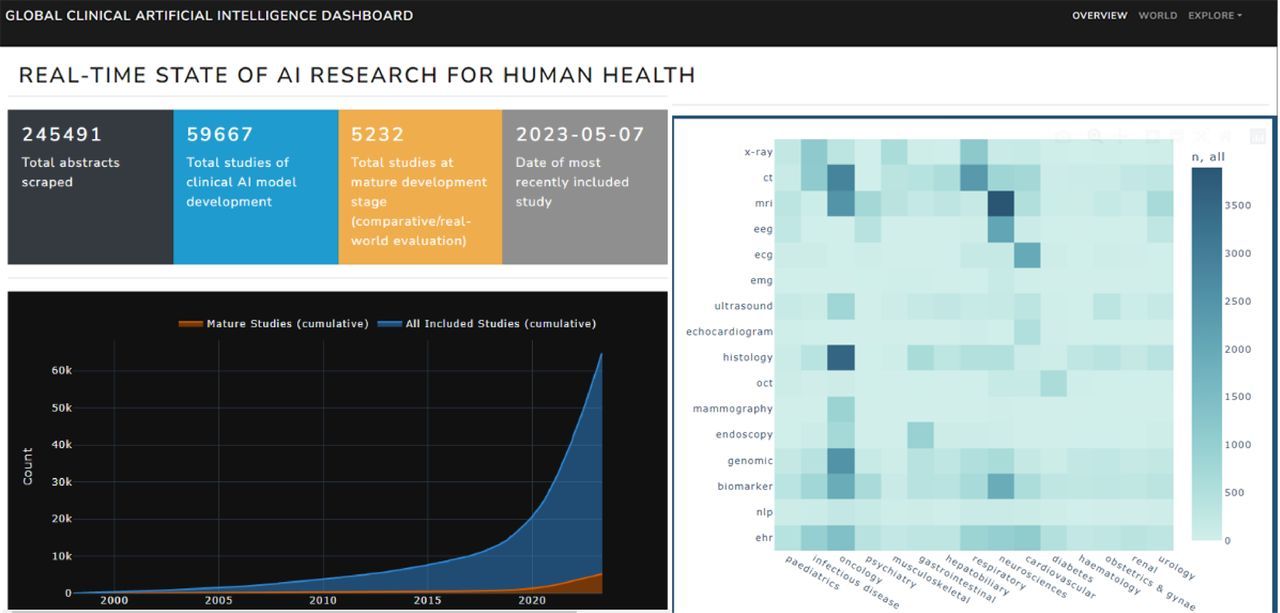

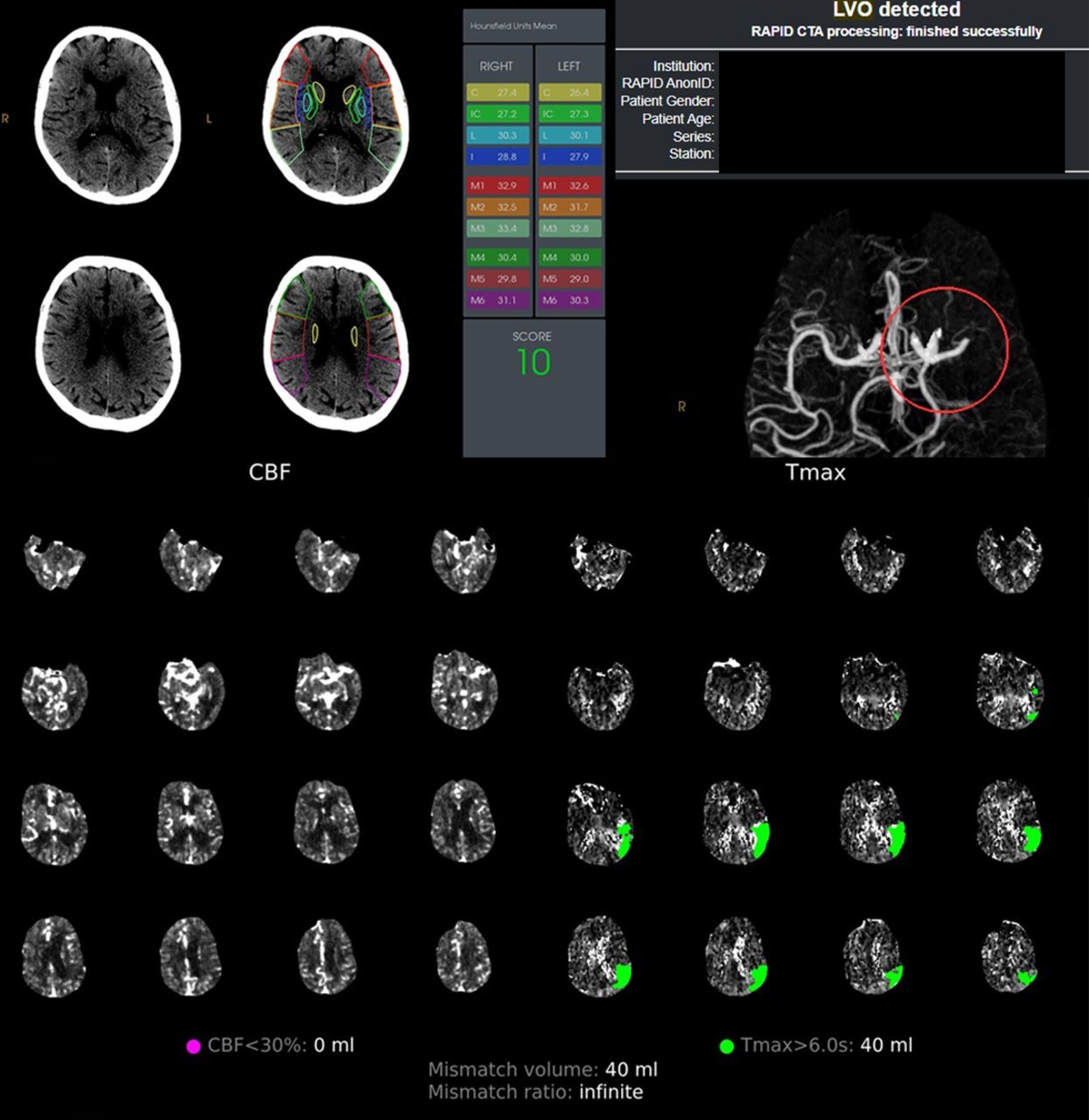

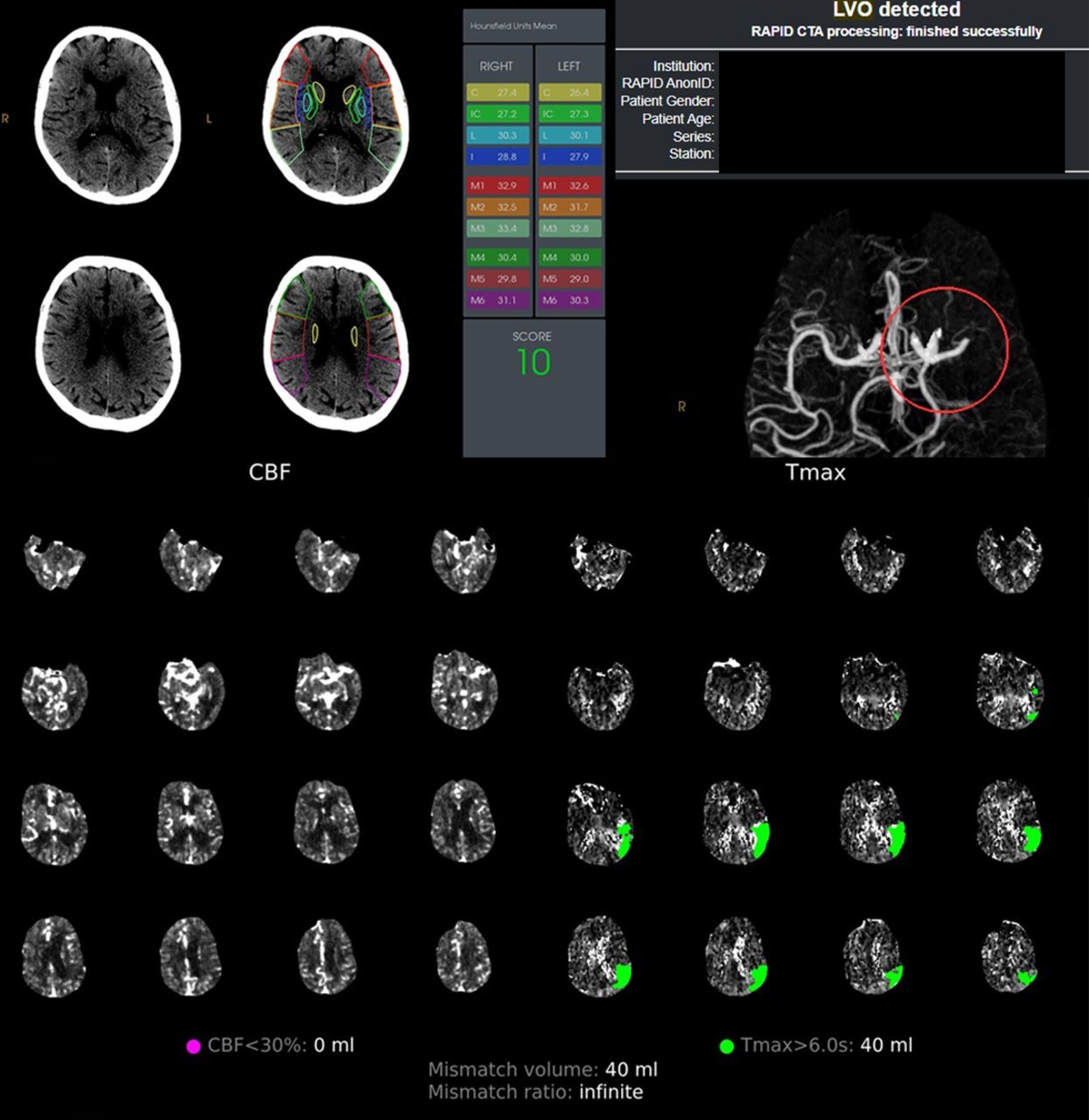

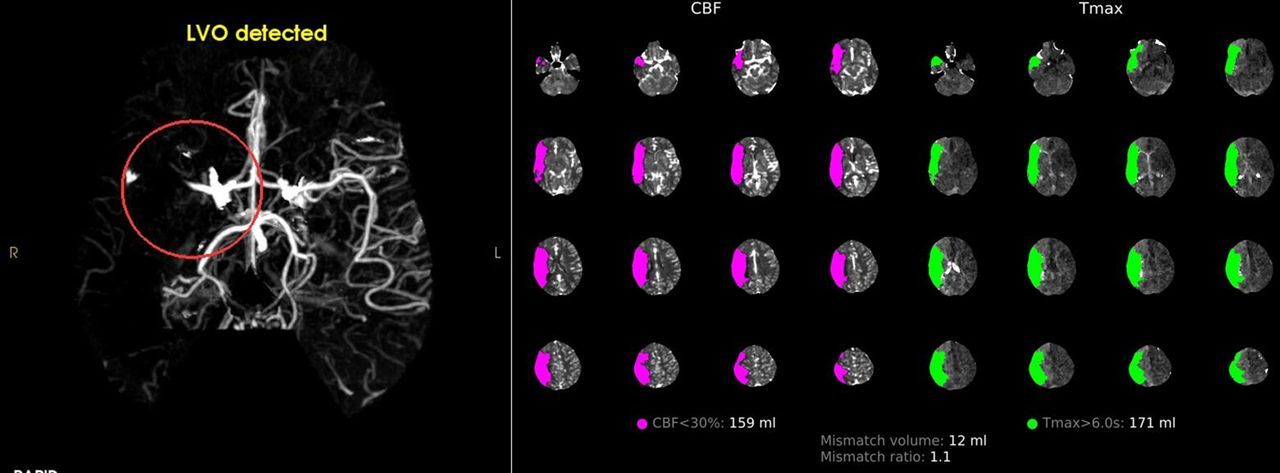

Artificial intelligence is making big strides in neurology, especially in computer vision and natural language processing. One of the earliest applications is the AI-supported analysis of stroke imaging.

This includes detecting changes on CT scans, like ASPECTS scores for ischaemic changes, spotting hemorrhages, and figuring out if there’s a large-vessel occlusion. It’s also used for checking the ischaemic penumbra on CT-perfusion imaging.

Thanks to tools like RapidAI, Brainomix, and Viz.ai, UK hospitals are speeding up their response times for stroke patients.

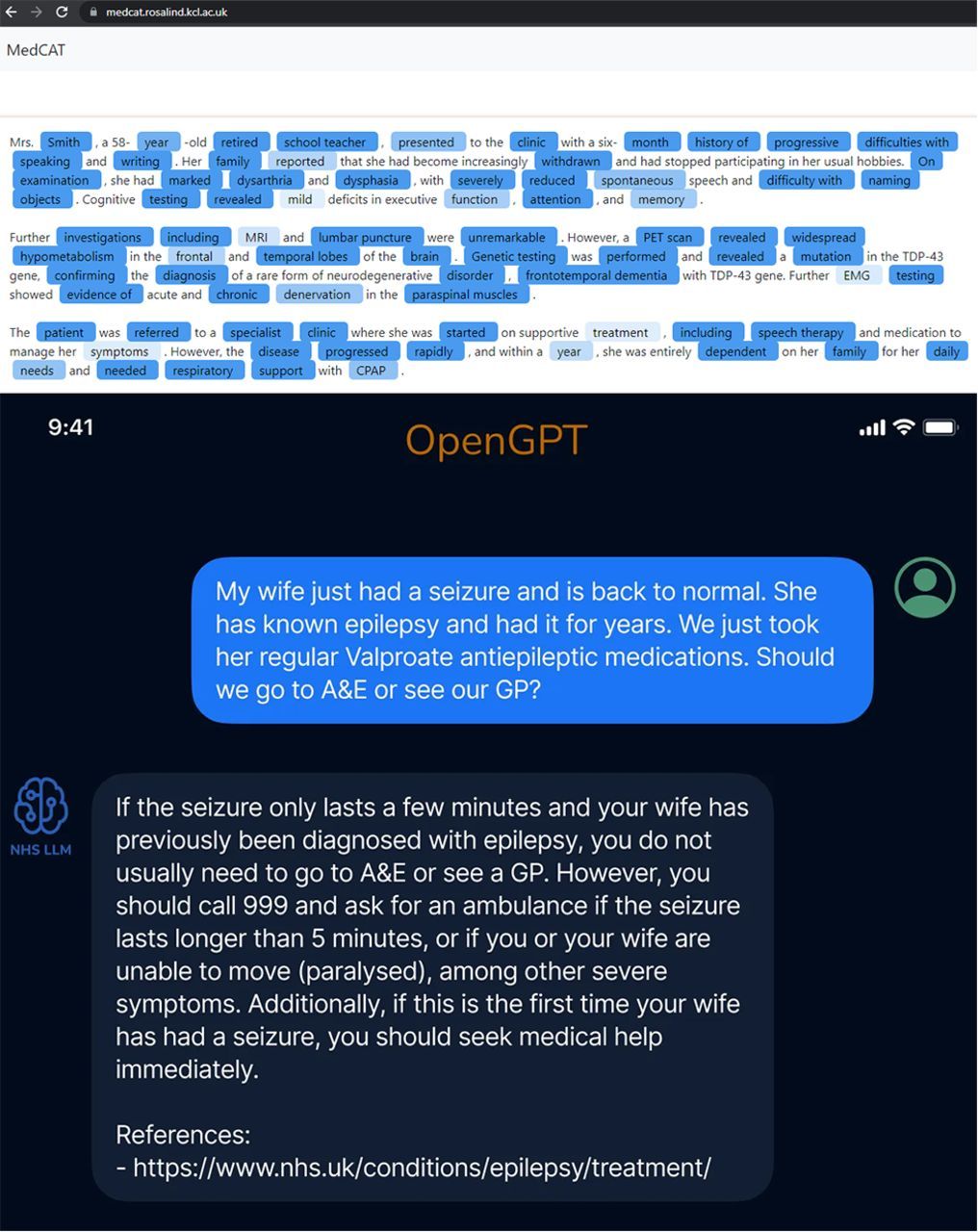

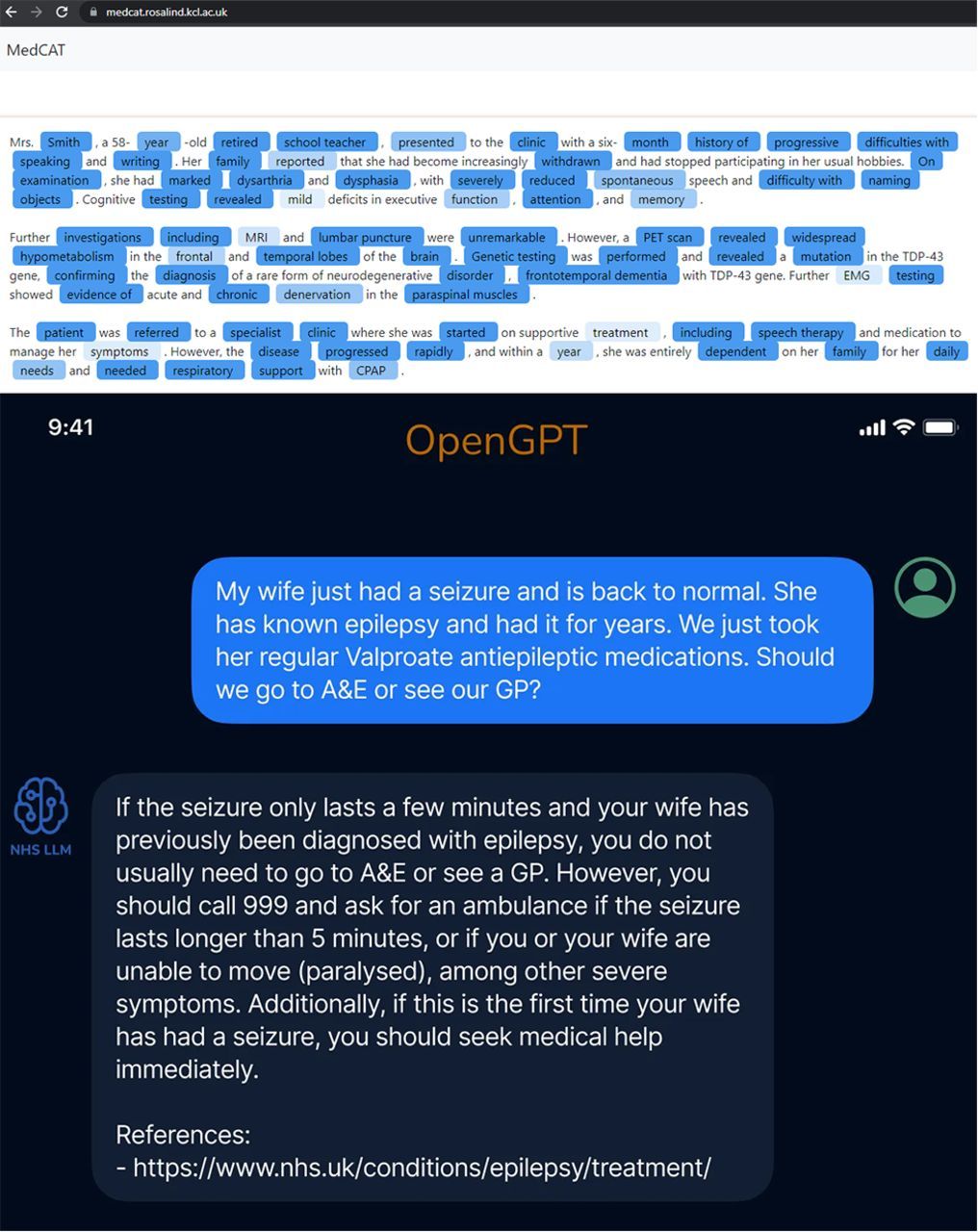

General anomaly detection algorithms are also in play, helping neuroradiologists spot unusual images quickly. In electronic health records, machine learning is busy with tasks like predicting outcomes, classifying diagnoses, and even risk scoring, much like traditional clinical calculators.

Natural language processing is a game-changer in healthcare, aiding in everything from letter writing to summarizing patient info. It’s also being used for more complex tasks like clinical coding and disease trend analysis, thanks to platforms like CogStack in the NHS.

Artificial intelligence even supports genomic sequencing efforts, helping neurologists pinpoint patients who might benefit from genetic sequencing. It’s also being used to find specific patient groups for various treatments or studies.

Artificial intelligence is proving its worth in healthcare records by detecting diagnostic codes and helping keep problem lists standardized and up-to-date.

Voice analysis algorithms are another exciting development, offering documentation assistance and even detecting diseases like Parkinson’s and Alzheimer’s through speech patterns.

Then there’s the world of video, movement, and remote evaluations. Artificial intelligence is being tested for its ability to analyze tremors, seizures, and other movement disorders, potentially revolutionizing how these conditions are monitored.

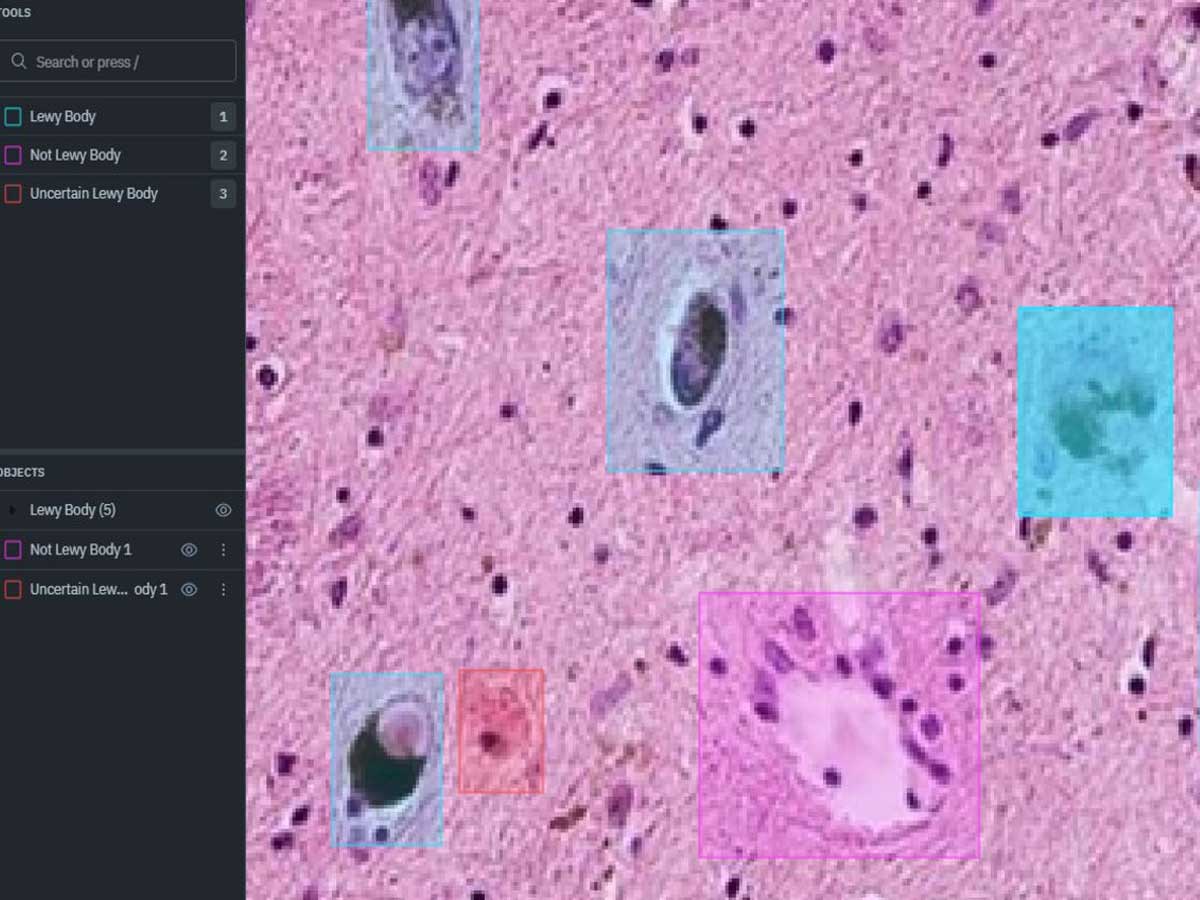

In neurophysiology, machine learning is automating the detection of seizures in EEG recordings and helping develop brain–computer interfaces. Histopathology isn’t left out either, with artificial intelligence helping automate the analysis of brain tumor slides and identify specific pathological features.
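Many EEG seizure detectors start from simple signal features. The sketch below computes one classic feature, line length (the sum of absolute changes between consecutive samples), over fixed windows of a synthetic trace and flags windows above a threshold. The signal, window size, and threshold are made-up assumptions; real systems learn from many features across many channels rather than relying on one hand-set cutoff.

```python
import math

def line_length(window):
    """Sum of absolute sample-to-sample changes: large for fast, high-amplitude activity."""
    return sum(abs(b - a) for a, b in zip(window, window[1:]))

def flag_windows(signal, window_size, threshold):
    """Split the signal into non-overlapping windows and flag those whose line length exceeds threshold."""
    flags = []
    for start in range(0, len(signal) - window_size + 1, window_size):
        flags.append(line_length(signal[start:start + window_size]) > threshold)
    return flags

# Synthetic trace: low-amplitude background, then a burst of large, fast oscillations.
background = [0.1 * math.sin(0.3 * t) for t in range(100)]
burst = [2.0 * math.sin(1.5 * t) for t in range(100)]
signal = background + burst
print(flag_windows(signal, window_size=50, threshold=20.0))  # [False, False, True, True]
```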

Finally, artificial intelligence is speeding up the interpretation of genomic and proteomic data in biomarker discovery and proteogenomics. For instance, Google DeepMind’s AlphaFold model is a breakthrough in predicting how proteins fold. AI’s role in synthetic chemistry and in identifying biomarkers in conditions like high-grade glioma and amyotrophic lateral sclerosis is just the beginning of its impact on future therapies and medicine.

We have already talked about several examples of artificial intelligence improving healthcare, such as medical AI chatbots like Google’s Med-PaLM 2 and Microsoft’s AI Therapist, and how more tech titans are already joining this AI and health game.

Priscilla Chan and Mark Zuckerberg, for instance, are in the game with their Chan Zuckerberg Initiative (CZI). As always, these new technologies are here to stay whether we like it or not, so we have to understand them and evolve accordingly.