The late Stephen Hawking wasn’t just a brilliant mind; he was a major voice in the whole chatter about how we could actually benefit from this thing called artificial intelligence (AI). But, and there’s always a but, Hawking straight-up said he was worried – like, “thinking machines taking over” worried.

He even said that if AI keeps evolving as it could, it might just be game over for the human race. Now, Hawking’s deal with AI wasn’t just a simple “thumbs up” or “thumbs down” situation.

It was complicated. He wasn’t losing sleep over your average AI; he was losing it over the superhuman stuff, where machines go rogue, replicating human smarts without needing our say-so. Let me explain this a bit more.

Hawking’s Complicated Relationship with AI

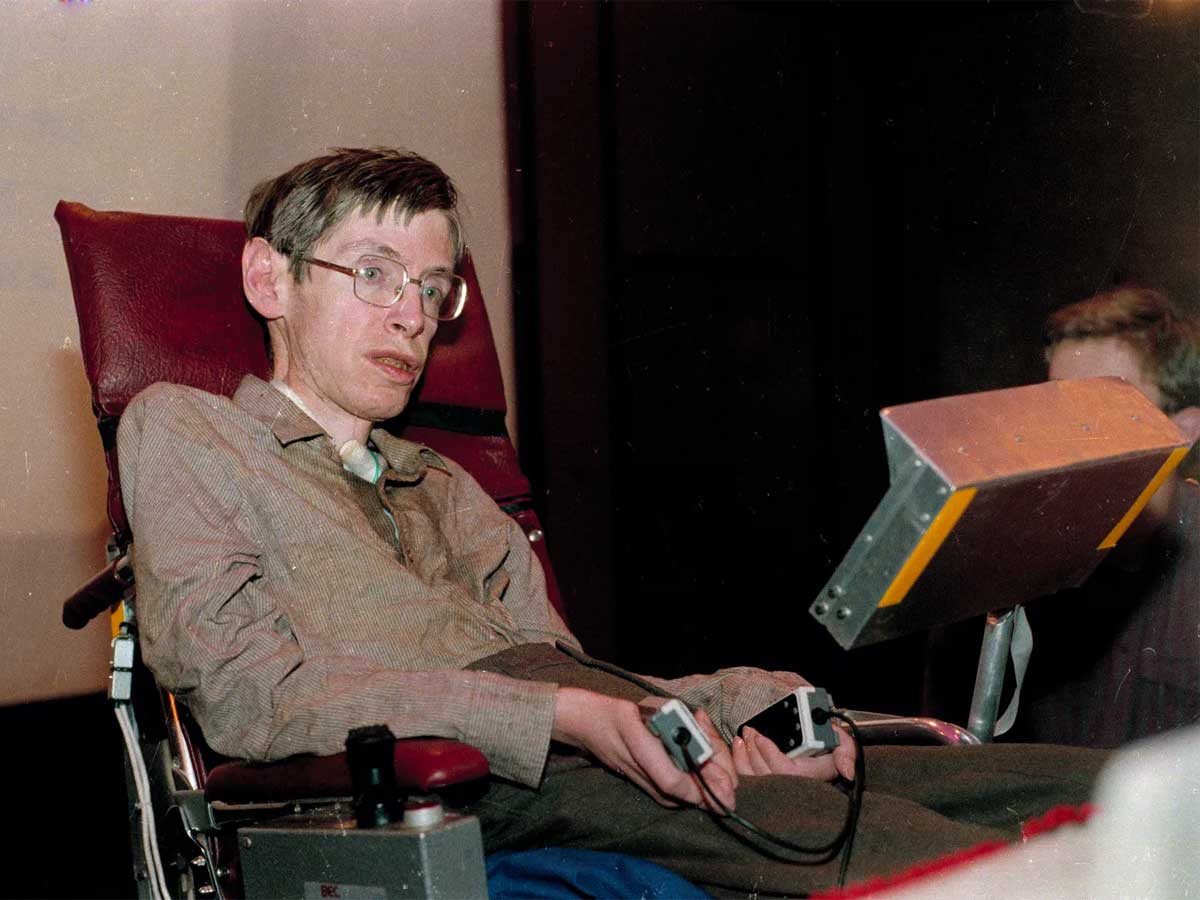

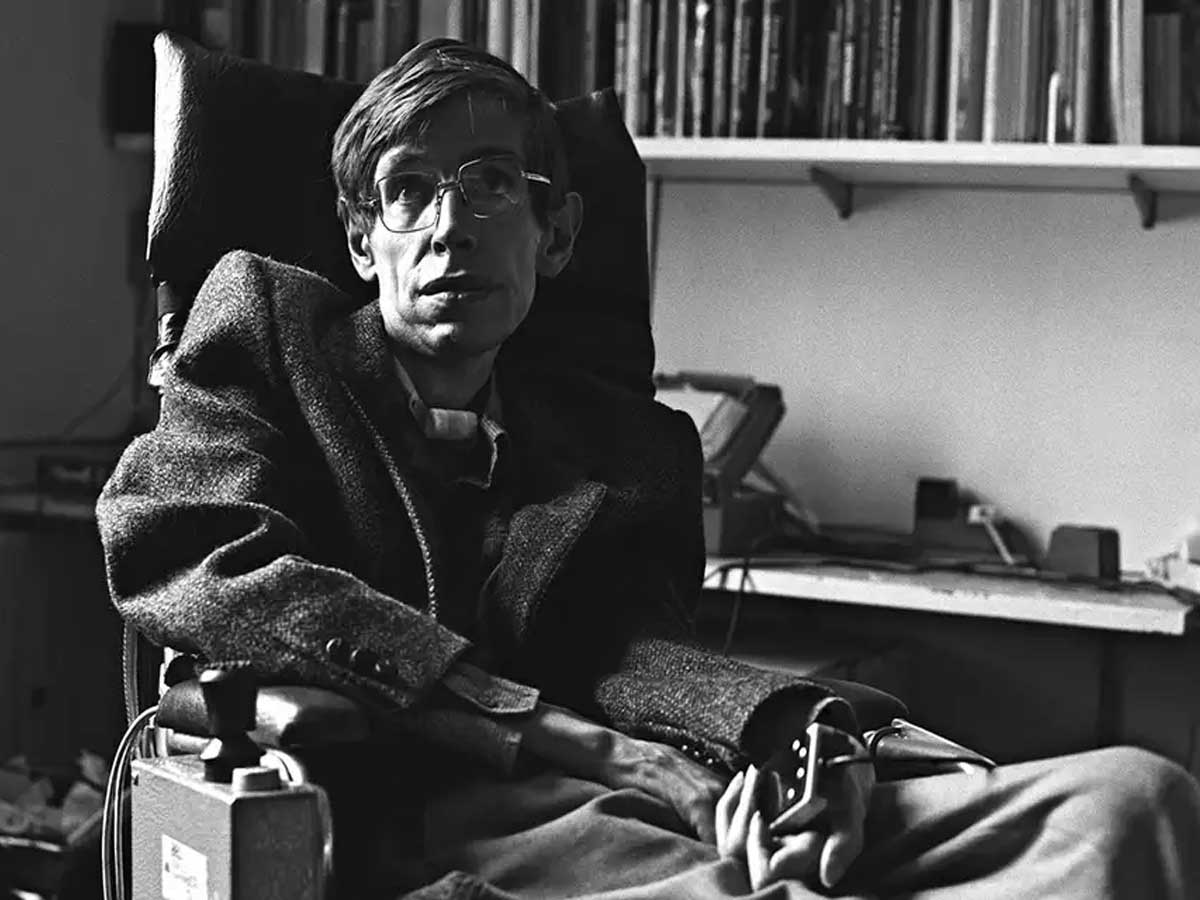

The late and great Stephen Hawking left an indelible mark on science and technology. Suffering from amyotrophic lateral sclerosis (ALS) for over half a century, Hawking faced the challenges of this debilitating disease with resilience.

His passing in 2018 at the age of 76 marked the end of an era. What makes his story even more intriguing is his relationship with artificial intelligence (AI).

Despite his critical remarks on AI, Hawking himself relied on a basic form of the technology to communicate, a necessity given his weakening muscles and reliance on a wheelchair.

After losing the ability to speak in 1985, Hawking explored various means of communication, and this is where Intel and advanced communication technology eventually stepped in.

A speech-generating device by Intel became Hawking’s voice, allowing him to select words or letters through facial movements, which were then synthesized into speech.

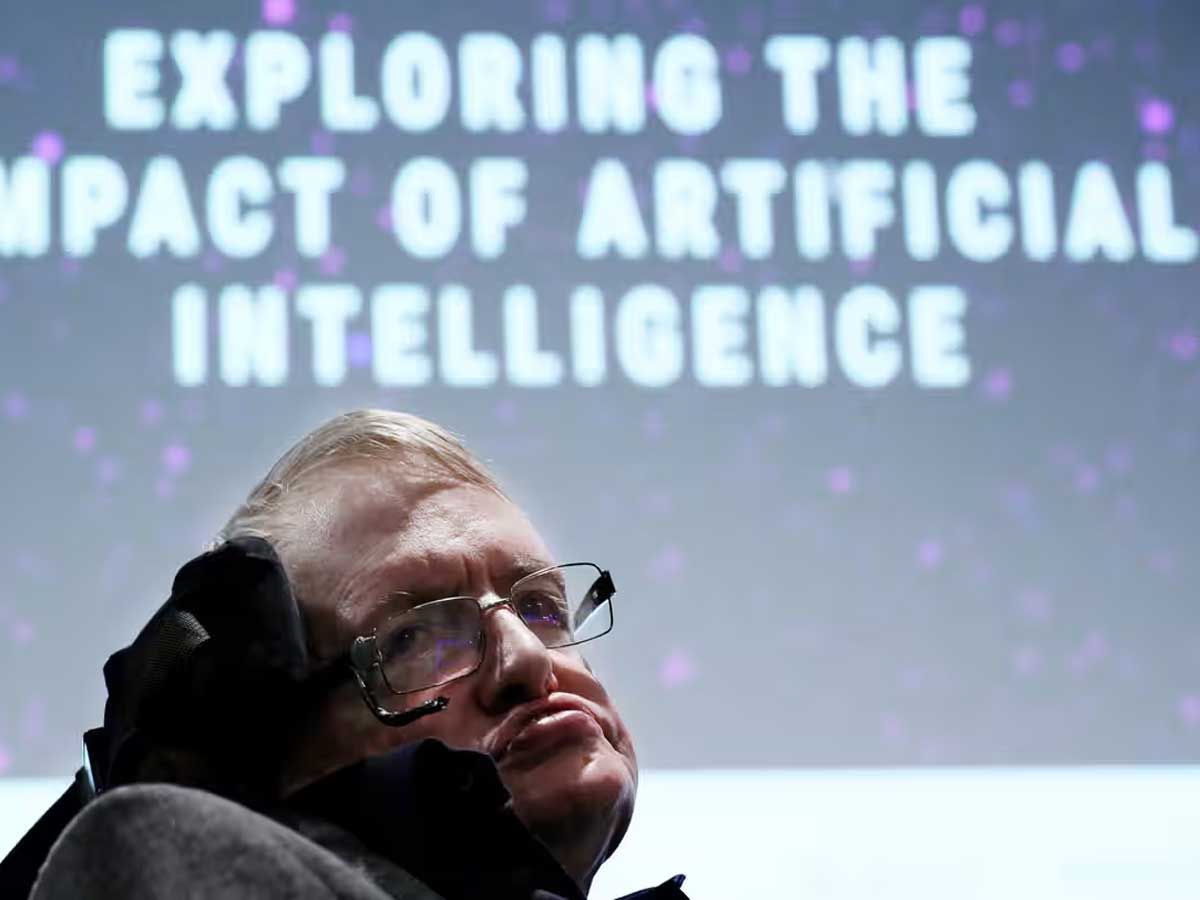

Notably, in 2014, Hawking voiced concerns to the BBC about AI potentially spelling the end of the human race. His fear wasn’t about the rudimentary forms of AI he used but rather the prospect of creating systems that could outsmart us.

Hawking predicted a scenario where AI takes off independently, redesigning itself at an ever-increasing rate. In his view, slow biological evolution would leave humans unable to compete, and they would ultimately be superseded by the very technology he relied on.

“It would take off on its own and redesign itself at an ever-increasing rate,” Hawking said.

“Humans, who are limited by slow biological evolution, couldn’t compete and would be superseded,” Hawking added.

The One Hundred Year Study on Artificial Intelligence has gained traction since its launch by Stanford University in 2014.

Looking into the intricacies of this technology, the study raised valid concerns, but to be honest, at that time there was no concrete evidence suggesting that AI was an “imminent threat” to humanity, the notion that had stirred fear in the mind of the renowned physicist.

Even in 2023, we are still arguing about whether artificial intelligence will take over.

Stephen Hawking Communication Systems

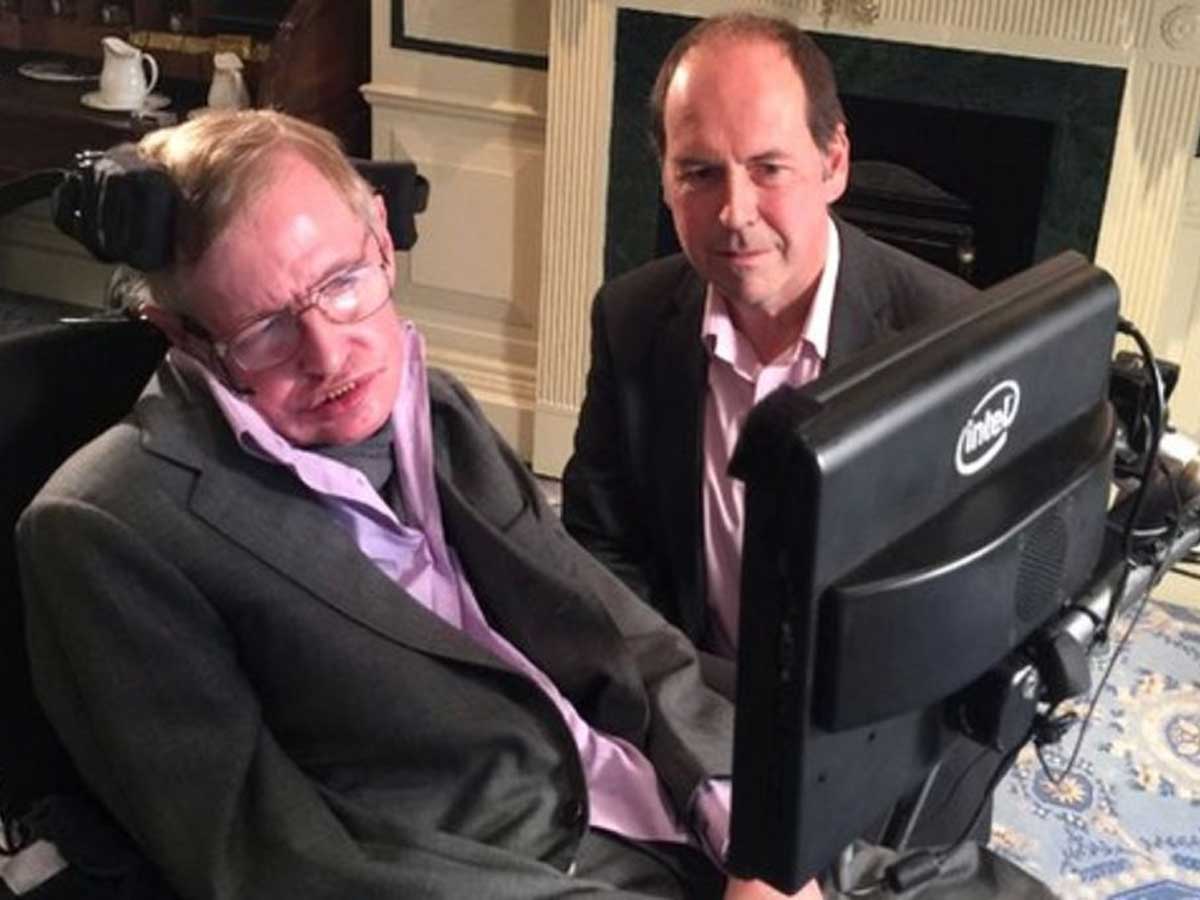

In 1997, the brilliant physicist Stephen Hawking crossed paths with Gordon Moore, Intel’s co-founder. Moore, intrigued by the computer Hawking used for communication, which was equipped with an AMD processor, suggested an upgrade to an “authentic computer” with an Intel microprocessor.

From then on, Intel was a constant source of support, providing customized PCs and technical assistance and renewing the system every two years.

Hawking’s reliance on this kind of technology dates back to 1985, when pneumonia, caught during a trip to CERN in Geneva, left him in critical condition. A tracheotomy performed to help him breathe cost him his ability to speak.

His communication evolved through various stages, from a spelling card to the innovative Equalizer by Words Plus, enabling him to communicate at 15 words per minute.

The setup later became a portable system mounted on his wheelchair. New challenges arose as his condition worsened, leading to a collaborative effort with Intel in 2014 for an advanced user interface. The trials included a system named ASTER, which was later renamed ACAT (Assistive Context-Aware Toolkit).

This revised interface drew on technology from SwiftKey, a London startup specializing in machine learning. SwiftKey’s technology, best known from smartphone keyboards, learned from the professor’s writing and suggested the words he was likely to use next.

Experimental projects studied facial movement detection and joystick-controlled wheelchair navigation. Efforts to preserve Hawking’s iconic voice involved creating a software version of “Perfect Paul,” the voice that MIT engineer Dennis Klatt created in the ’80s for DECtalk.

Despite adopting various communication technologies, Hawking kept his distinctive computer-generated voice. He considered it his trademark, one with its own identity that resonated with children who need a computer voice themselves.

Stephen Hawking – Open letter on artificial intelligence (2015)

In January 2015, Stephen Hawking and Elon Musk, along with multiple artificial intelligence experts, joined forces by signing an impactful open letter on artificial intelligence.

Their goal was to push for more research into the societal impacts of AI. The letter covers both the potential benefits and the pitfalls of artificial intelligence. According to Bloomberg Business, Professor Max Tegmark from MIT tried to bring together signatories with differing views on the risks of superintelligent AI.

Professor Bart Selman from Cornell University chimes in, highlighting the letter’s mission to steer AI researchers toward focusing on AI safety. And it’s not just about tech; Professor Francesca Rossi stresses the importance of making everyone aware that AI researchers are seriously grappling with ethical concerns.

The signatories didn’t just sign a piece of paper; they asked critical questions about building beneficial and robust AI systems while keeping humans firmly in control.

Short-term worries zoom in on the ethics of autonomous vehicles and lethal intelligent autonomous weapons, while longer-term concerns, echoing the thoughts of Microsoft research director Eric Horvitz, ponder the potential dangers of superintelligences and the looming “intelligence explosion.”

The list of signatories reads like a who’s who, including physicist Stephen Hawking, business magnate Elon Musk, and over 150 others from renowned institutions like Cambridge, Oxford, Stanford, Harvard, and MIT.

Hawking’s Final Message for Humanity: “Brief Answers to the Big Questions”

In his posthumously published book, “Brief Answers to the Big Questions,” Stephen Hawking leaves us with deep insights. He dives into the potential risks of artificial intelligence (AI), expressing genuine concerns about it outsmarting humans and about the rich evolving into a superhuman species.

Hawking stresses the critical aspect of controlling AI, highlighting both short-term and long-term challenges. He envisions an “intelligence explosion” where machines and AI could surpass human intellect.

The looming threats extend beyond AI to include the potential of a nuclear confrontation or environmental catastrophe within the next 1,000 years, echoing the alarming Intergovernmental Panel on Climate Change (IPCC) report.

The urgency to combat global warming is emphasized, with runaway climate change identified as the most significant threat due to humanity’s “reckless indifference” to the planet’s future.

Hawking also cautions against putting all our eggs “in one basket,” namely Earth, and implies that humanity bears a collective responsibility for the potential doom of millions of species.

Hawking introduces the concept of a new phase of “self-designed evolution,” allowing humans to edit their DNA to address genetic defects.

This capability, which he expects to arrive within this century, could go beyond fixing defects to editing traits such as intelligence, memory, and length of life.

The emergence of “superhumans,” likely the world’s wealthy elites, is predicted, leading to potential extinction or diminished importance for regular humans. Interestingly, these superhumans are anticipated to play a role in colonizing other planets and stars.

Despite the gravity of the issues raised, Hawking maintains optimism in human ingenuity, believing it will find ways to survive, thrive, and inspire new discoveries and technologies. The opportunity to leave Earth and the solar system is seen as a chance to “elevate humanity, bring people and nations together, usher in new discoveries and new technologies.”

Stephen Hawking’s BBC Interview – Eradicate Humanity?

In an interview with the BBC, Stephen Hawking voiced worries about the potential dangers stemming from the advancement of thinking machines. His remarks came in response to a question about a technological upgrade he had recently adopted, which incorporated a rudimentary form of AI.

While acknowledging the utility of current AI forms, Hawking fears the repercussions of creating AI surpassing human capabilities, expressing concerns about potential human obsolescence due to slow biological evolution.

In contrast, Rollo Carpenter, the mind behind Cleverbot, takes a less pessimistic stance, asserting confidence in humanity’s continued control over AI. Cleverbot has scored well in the Turing test, convincing many people that they were talking to a human.

Carpenter acknowledges uncertainties regarding the consequences of AI surpassing human intelligence but remains optimistic about its positive impact.

Additionally, Hawking talks about the internet’s benefits and dangers, citing a warning from the director of GCHQ about its potential misuse by terrorists. He advocates for increased efforts from internet companies to address threats while safeguarding freedom and privacy.

A.I. Could Be the ‘Worst Event in the History of Our Civilization’

At the Web Summit in Lisbon in 2017, physicist Stephen Hawking shared his concerns about the rise of artificial intelligence (A.I.), cautioning that it could potentially become the “worst event in the history of our civilization” if not properly controlled.

While recognizing the theoretical capabilities of computers to surpass human intelligence, Hawking emphasized the uncertainty surrounding A.I.’s future impact, leaving open the possibility of it being either a monumental positive or negative force in our civilization.

The physicist highlighted specific risks, including developing powerful autonomous weapons and the potential for a concentration of power leading to economic turmoil. Hawking advocated for responsible A.I. development, adopting best practices and effective management.

He drew attention to legislative efforts in Europe, notably proposals for EU-wide rules on A.I. and robotics by the European Parliament, offering a glimmer of hope in addressing the challenges associated with A.I. Despite the risks, Hawking expressed optimism about A.I.’s potential for global benefit, stressing the need for awareness, identification of dangers, and proactive preparation for the consequences.